Introduction

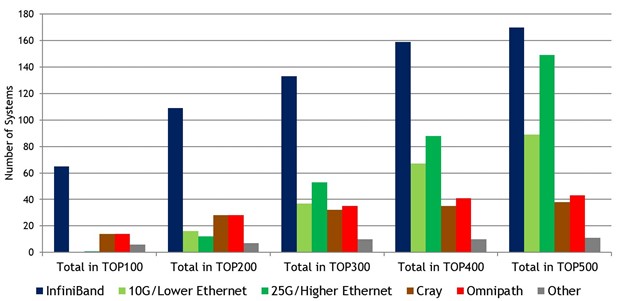

If you work in the data center industry, you have probably heard the term InfiniBand bandied about. Search the web and you will find scores of articles predicting the imminent death of InfiniBand. The data says otherwise: InfiniBand is alive and kicking and, according to Top500.org, powers 65% of the top 100 HPC platforms in the world. See the chart below from their June 2021 report.

InfiniBand Overview

InfiniBand is a network standard that specifies an input/output architecture for interconnecting servers and other communications infrastructure equipment. It is designed to be scalable and uses a switched-fabric network architecture. With low latency, high bandwidth, and minimal processing overhead, InfiniBand is well suited to carrying multiple traffic types (clustering, communications, storage, management) over a single connection. InfiniBand technology is promoted by the InfiniBand Trade Association (IBTA). Currently, Mellanox (now part of NVIDIA) is one of the few suppliers of InfiniBand switches and hardware.

InfiniBand Advantages

InfiniBand offers several key advantages. It is a full transport-offload network, which means that transport operations are handled by the network hardware rather than by the CPU. It is a very efficient standard that can move more data with less overhead. InfiniBand also has much lower latency than Ethernet and, most importantly, it incorporates processing engines inside the network that accelerate data processing for high-performance computing. These technology advantages make InfiniBand an ideal option for any compute- and data-intensive application.

• Low latency, with measured end-to-end delays of 1 µs (microsecond)

• High performance

• Data integrity through CRCs (cyclic redundancy checks) across the fabric to ensure data is correctly transferred

• High efficiency with direct support for Remote Direct Memory Access (RDMA) and other advanced transport protocols
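The CRC idea behind the data-integrity bullet can be sketched in a few lines. This is a simplified illustration using the standard CRC-32 from Python's zlib module, not InfiniBand's actual link-level scheme: real InfiniBand packets carry two CRCs (a 32-bit invariant CRC and a 16-bit variant CRC) computed over specific packet fields. The function names `frame_with_crc` and `crc_ok` are hypothetical.

```python
import zlib


def frame_with_crc(payload: bytes) -> bytes:
    """Sender side (illustrative): append a 4-byte big-endian CRC-32 to the payload."""
    crc = zlib.crc32(payload)  # unsigned 32-bit value in Python 3
    return payload + crc.to_bytes(4, "big")


def crc_ok(frame: bytes) -> bool:
    """Receiver side (illustrative): recompute the CRC-32 and compare it with the trailer."""
    payload, trailer = frame[:-4], frame[-4:]
    return zlib.crc32(payload) == int.from_bytes(trailer, "big")


if __name__ == "__main__":
    frame = frame_with_crc(b"RDMA write payload")
    print(crc_ok(frame))  # True: frame arrived intact

    corrupted = bytearray(frame)
    corrupted[0] ^= 0x01  # flip a single bit in the payload
    print(crc_ok(bytes(corrupted)))  # False: CRC-32 catches every single-bit error
```

A receiver that sees a mismatch discards the packet instead of passing corrupted data up the stack, which is the basic guarantee the bullet describes.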

Even though InfiniBand is primarily deployed for high-performance computing (HPC) applications, it is also being adopted by latency-sensitive industries such as banking and trading. Hyperscalers such as Microsoft use InfiniBand for AI and machine learning workloads on their cloud computing platforms.

Our InfiniBand solutions

We offer AOCs (Active Optical Cables) designed exclusively for InfiniBand. A critical differentiating factor between Ethernet and InfiniBand AOCs is the bit error rate (BER). Our InfiniBand-capable AOCs have a maximum pre-FEC BER of 5E-8, far lower than the 2.4E-4 of traditional Ethernet AOCs.

Another benefit of our InfiniBand AOCs is cost. They are priced nearly on par with Ethernet AOCs and at a fraction of what the big vendors charge.

Our DSP-free AOCs also offer low power consumption. Using an analog CDR chipset instead of a DSP-based one cuts power consumption by about 20%. Analog CDR also has roughly one-fifth the latency of a DSP, a key advantage for the high-frequency data traffic at the server in HPC and AI applications.
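To put the two BER figures in perspective, a quick calculation of the average number of errored bits per second is shown here. The 100 Gb/s line rate is a hypothetical value chosen only for illustration, and `expected_bit_errors_per_second` is a hypothetical helper; the BER values are the ones quoted above.

```python
def expected_bit_errors_per_second(line_rate_bps: float, ber: float) -> float:
    """Average errored bits per second = line rate (bits/s) x bit error rate."""
    return line_rate_bps * ber


# Hypothetical line rate, for illustration only (not a product specification).
LINE_RATE_BPS = 100e9  # 100 Gb/s

BERS = {
    "InfiniBand AOC (max pre-FEC BER)": 5e-8,
    "Traditional Ethernet AOC (pre-FEC BER)": 2.4e-4,
}

if __name__ == "__main__":
    for label, ber in BERS.items():
        errors = expected_bit_errors_per_second(LINE_RATE_BPS, ber)
        print(f"{label}: {errors:,.0f} errored bits/s")
    # The ratio between the two does not depend on the line rate.
    print(f"Ratio: {BERS['Traditional Ethernet AOC (pre-FEC BER)'] / BERS['InfiniBand AOC (max pre-FEC BER)']:,.0f}x")
```

At the assumed 100 Gb/s this works out to about 5,000 versus 24,000,000 errored bits per second before FEC, a ratio of roughly 4,800x that holds at any line rate.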

Contact us at info@vitextech.com to learn more about our InfiniBand-ready AOCs.
